A key engineering focus at Starburst is performance. One goal is to reduce the amount of time that a CPU has to work on a given query, also known as CPU time. When queries run concurrently, CPU time is a stable metric that reflects real performance.

When CPU time for an individual query drops, so can Trino’s ability to utilize the CPUs fully, often because of scheduling. As a result, a reduction in CPU time does not always translate to a reduction in latency or wall time. After a year of major performance enhancements, we decided to focus our efforts on increasing CPU utilization and reducing query wall time.
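To make the relationship between CPU time, wall time, and utilization concrete, here is a small arithmetic sketch. The numbers and the `cpu_utilization` helper are hypothetical and chosen only for illustration; they are not taken from our benchmarks.

```python
def cpu_utilization(cpu_seconds: float, wall_seconds: float, cores: int) -> float:
    """Fraction of the available core-seconds that were spent doing work."""
    return cpu_seconds / (wall_seconds * cores)


# Hypothetical query on a single 16-core worker.
before = cpu_utilization(cpu_seconds=320.0, wall_seconds=25.0, cores=16)

# An optimization cuts CPU time by 25%, but scheduling keeps wall time unchanged.
after = cpu_utilization(cpu_seconds=240.0, wall_seconds=25.0, cores=16)

print(f"utilization before: {before:.0%}")  # 80%
print(f"utilization after:  {after:.0%}")   # 60%
```

In this made-up case the query does less work but finishes no sooner, so the cores simply sit idle more often. Turning the saved CPU time into lower wall time requires keeping the cores busy.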

Increasing CPU utilization

The most significant change is that Trino’s query.execution-policy now defaults to phased rather than all-at-once. The all-at-once approach scheduled all query stages in a single shot with the goal of simplicity and reduced latency. The phased execution policy was later added as a configuration option that would schedule only the stages of a query that can make progress.
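The difference between the two policies can be sketched with a toy model. This is not Trino's scheduler: the `Stage` class, the `waits_for` field, and both functions are hypothetical names used only to illustrate the idea of scheduling stages that can make progress.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Stage:
    name: str
    # Stages that must finish before this one can make progress (toy assumption).
    waits_for: set[str] = field(default_factory=set)


def all_at_once(stages: list[Stage], finished: set[str]) -> list[str]:
    """Schedule every unfinished stage immediately, whether or not it can run."""
    return [s.name for s in stages if s.name not in finished]


def phased(stages: list[Stage], finished: set[str]) -> list[str]:
    """Schedule only unfinished stages whose prerequisites have all finished."""
    return [
        s.name
        for s in stages
        if s.name not in finished and s.waits_for <= finished
    ]


# A join whose probe side cannot make progress until the build side is done.
stages = [Stage("build"), Stage("probe", waits_for={"build"})]

print(all_at_once(stages, finished=set()))  # ['build', 'probe']
print(phased(stages, finished=set()))       # ['build']
print(phased(stages, finished={"build"}))   # ['probe']
```

In the toy model, the all-at-once policy hands resources to the probe stage while it is still waiting, whereas the phased policy holds it back until the build stage completes.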

Recently, Starburst Co-founder and Trino Committer Karol Sobczak observed that the phased execution policy could schedule stages that in turn create subsequent stages which can’t make progress. That issue defeats the purpose of the phased execution policy. Fixing this logic reduced latency and made it possible to set the phased execution policy as the default.

Additional performance enhancements

Other significant changes include adaptively setting task.concurrency to the number of physical cores on a node and increasing the default value of hive.split-loader-concurrency. We observed that the extra logical cores from hyper-threading do not translate to improved query performance, so task.concurrency follows the physical core count. Increasing the split loader concurrency, in turn, helps the engine process partitions and small files more quickly.

Benchmark metrics

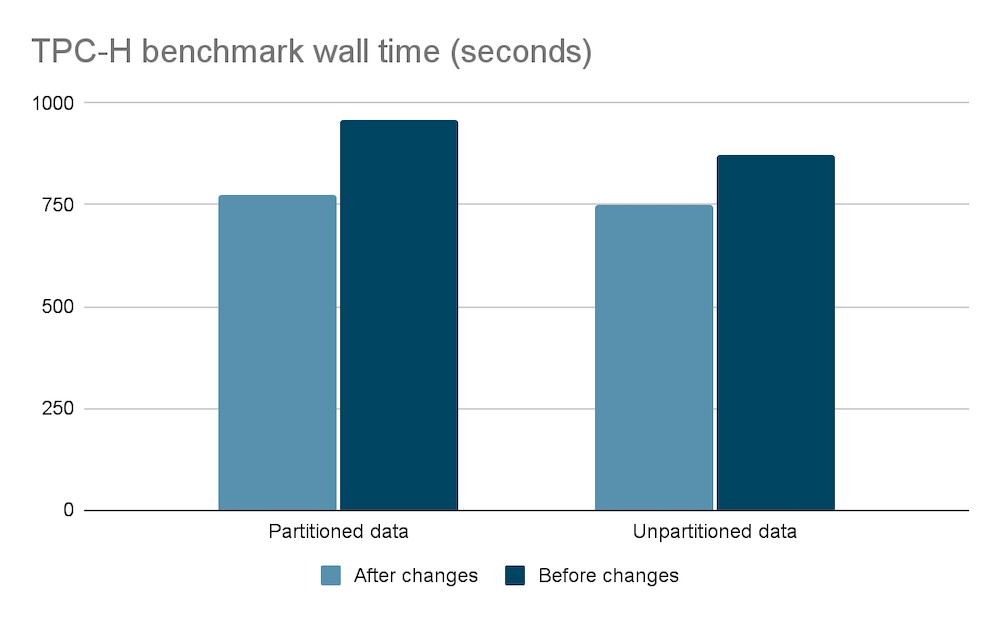

Based on our internal benchmarking, customers can expect an average reduction of 13% in wall time, with a 20% reduction for TPC-H on partitioned data. We have seen improvements as high as 50% for TPC-H query 12 on partitioned data.

The benchmark results were obtained by running the TPC-H and TPC-DS benchmarks with one coordinator and six worker nodes. The data was queried through the Hive connector, using both partitioned and unpartitioned data at a 1 TB scale.

While TPC-H and TPC-DS are both decision support benchmarks, TPC-H is more representative of ad hoc queries, which tend to be simpler.

Available out of the box

The best part about our enhancements is that they do not require action on your end. They’re available out of the box in the upcoming LTS release. However, remember that explicitly configured values still take precedence over the new defaults, so you might need to unset query.execution-policy and task.concurrency.
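If you are unsure whether your cluster pins either property, a quick check along these lines can help. This is a minimal sketch: the `explicitly_set` helper and the sample file contents are hypothetical, and the parser handles only simple `key=value` lines rather than the full Java properties syntax.

```python
# Properties that would keep overriding the new defaults if left in place.
OVERRIDING_KEYS = ("query.execution-policy", "task.concurrency")


def explicitly_set(properties_text: str, keys=OVERRIDING_KEYS) -> dict[str, str]:
    """Return the watched keys that are explicitly set, with their values."""
    found = {}
    for raw in properties_text.splitlines():
        line = raw.strip()
        # Skip blank lines and comments.
        if not line or line.startswith(("#", "!")):
            continue
        key, sep, value = line.partition("=")
        if sep and key.strip() in keys:
            found[key.strip()] = value.strip()
    return found


# Hypothetical contents of etc/config.properties on one node.
sample = """\
# example only
coordinator=false
query.execution-policy=all-at-once
task.concurrency=32
"""

print(explicitly_set(sample))
# {'query.execution-policy': 'all-at-once', 'task.concurrency': '32'}
```

Any key the function reports is a candidate for removal. In practice you would read the file from each node instead of using the inline sample.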

Sit back, relax, and savor the faster query processing.